Cloudera moves to enhance GenAI with NVIDIA NIM Microservices

Transformative GenAI applications span many uses

Cloudera, the California-based hybrid data company for trusted enterprise AI, has partnered strategically with NVIDIA, the world leader in AI computing, to bolster generative AI (GenAI) capabilities.

The newly announced collaboration sees the integration of enterprise-grade NVIDIA NIM Microservices into Cloudera Machine Learning, a service within the Cloudera Data Platform tailored for AI and ML workflows.

The integration streamlines and fortifies end-to-end generative AI workflows in production environments.

Integrating NVIDIA NIM Microservices into Cloudera’s ecosystem, which has 25 exabytes of data under management, marks a significant milestone in empowering organisations across industries to build, customise, and deploy large language models (LLMs) for transformative generative AI applications.

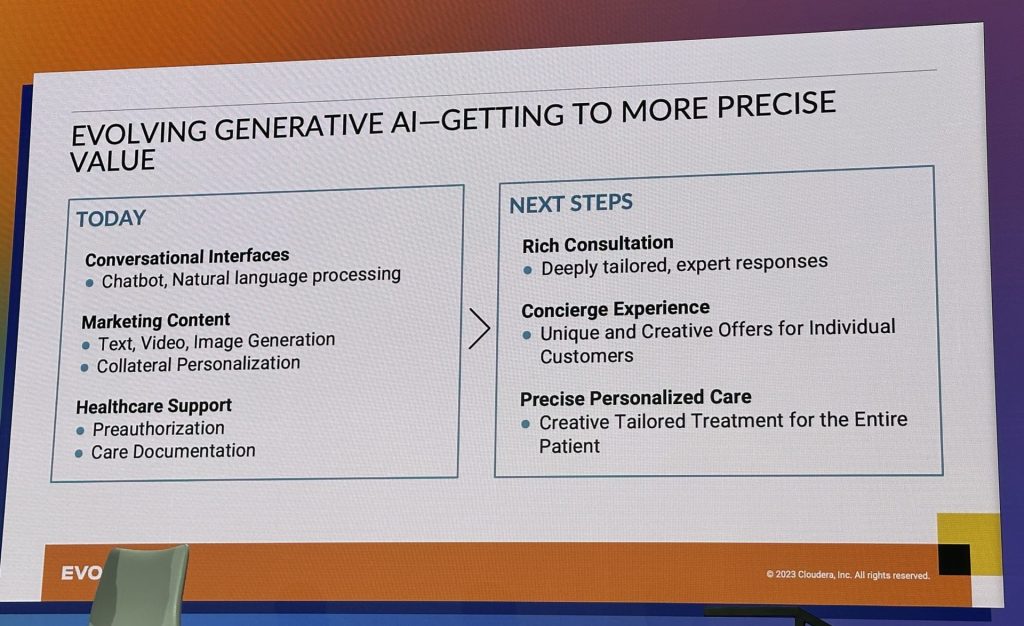

These applications span many use cases, including coding co-pilots for accelerated development, chatbots for automating customer interactions, text summarisation apps for efficient document processing, and contextual search enhancements.

Powerful tools

By combining enterprise data with NVIDIA NIM Microservices, developers gain access to powerful tools that link AI models to business data, so that the models can generate highly accurate and contextually relevant responses.

These responses can span various data formats, including text, images, and visualisations like bar graphs and pie charts.

With the addition of NVIDIA NIM Microservices and integration with NVIDIA AI Enterprise, Cloudera Data Platform aims to deliver streamlined end-to-end hybrid AI pipelines.

One key highlight of the new collaboration is the addition of model- and application-serving capabilities powered by NVIDIA NIM Microservices.

This enhancement promises to bolster model inference performance across all workloads, ensuring fault tolerance, low-latency serving, and auto-scaling for models deployed across public and private clouds.

RAG-based production applications

In addition, Cloudera’s integration with NVIDIA NeMo Retriever microservices simplifies the connection of custom LLMs to enterprise data. This functionality facilitates the development of retrieval-augmented generation (RAG)-based production applications.
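For readers unfamiliar with the pattern, the idea behind RAG can be sketched in a few lines of Python. This is a toy illustration only, not Cloudera's or NVIDIA's implementation: the documents, the function names (`retrieve`, `build_prompt`) and the simple word-overlap scoring are all assumptions made for the example, and a production system would replace them with a retriever service and a vector store.

```python
import math
import re
from collections import Counter

# Hypothetical stand-in for enterprise data; a real deployment would query
# a retriever service backed by a vector store instead of a Python list.
DOCUMENTS = [
    "Refunds are processed within five business days of approval.",
    "The data platform supports deployments on public and private clouds.",
    "Support tickets are answered by the customer care team within one day.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query (toy scoring)."""
    query_vec = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda doc: cosine(query_vec, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Combine the retrieved context with the question into one LLM prompt."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("How long do refunds take?", DOCUMENTS))
```

The resulting prompt would then be sent to an LLM, which answers from the retrieved passage rather than from its training data alone. That retrieval step is what grounds the model's responses in business data.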

The partnership also builds upon Cloudera’s previous efforts with NVIDIA, including integrating the NVIDIA RAPIDS Accelerator for Apache Spark into the Cloudera Data Platform.

Priyank Patel, Vice President of AI/ML Products at Cloudera, expressed confidence in the integration, stressing its potential to translate AI concepts into tangible business outcomes.

Justin Boitano, Vice President of Enterprise Products at NVIDIA, also highlighted the integration’s role in facilitating the deployment of LLMs to drive business transformation.

These enhanced AI capabilities were unveiled at the NVIDIA GTC developer conference (March 18-21, 2024), a premier AI and accelerated computing event in San Jose, California.

With Cloudera and NVIDIA’s collaboration, enterprises are poised to unlock new possibilities in generative AI, accelerating their journey towards data-driven insights and business success.

Featured image: Priyank Patel presents the Cloudera Data Platform tailored for AI and ML workflows at EVOLVE 2023 in New York City on November 1, 2023. Credit: Arnold Pinto

Last updated 11 months ago by Arnold Pinto