Cloudera launches disruptive AI Inference platform

Safeguarding sensitive data

Cloudera has launched Cloudera AI Inference, powered by NVIDIA NIM microservices. The service streamlines the deployment and management of large-scale AI models, helping enterprises move from initial pilot phases to full-scale production.

New research from Deloitte highlights that compliance risks and governance issues are the primary obstacles to GenAI adoption among enterprises. Nevertheless, over two-thirds of organisations increased their GenAI budgets in the third quarter of 2024, signalling robust demand for practical solutions.

To address these concerns, businesses are shifting towards private models and applications, whether hosted on-premises or in public clouds, which in turn requires secure and scalable infrastructure.

Cloudera AI Inference is designed to safeguard sensitive data by ensuring that development and deployment remain within enterprise control. The platform is built on trusted data to support reliable AI applications, and it is designed for high-performance inference.

That performance is crucial for developing AI-driven chatbots, virtual assistants, and other applications that enhance productivity and drive new business opportunities.

EVOLVE NY showcase

The new Cloudera AI Inference capabilities are being showcased at Cloudera’s premier AI and data conference, Cloudera EVOLVE NY, on October 10, 2024. The event in New York City highlights Cloudera’s commitment to taking enterprise data from pilot initiatives to production-ready GenAI solutions.

Dipto Chakravarty, Chief Product Officer of Cloudera, noted: “We are excited to bring Cloudera AI Inference to market, providing a single AI/ML platform that supports nearly all models and use cases.”

Kari Briski, Vice President of AI Software at NVIDIA, added: “By incorporating NVIDIA NIM microservices into Cloudera’s AI Inference platform, we’re empowering developers to create trustworthy generative AI applications easily.”

Strategic collaboration

The launch aligns with Cloudera’s strategic collaboration with NVIDIA, reinforcing the company’s commitment to driving AI innovation at a time when many industries are grappling with digital transformation and AI integration challenges.

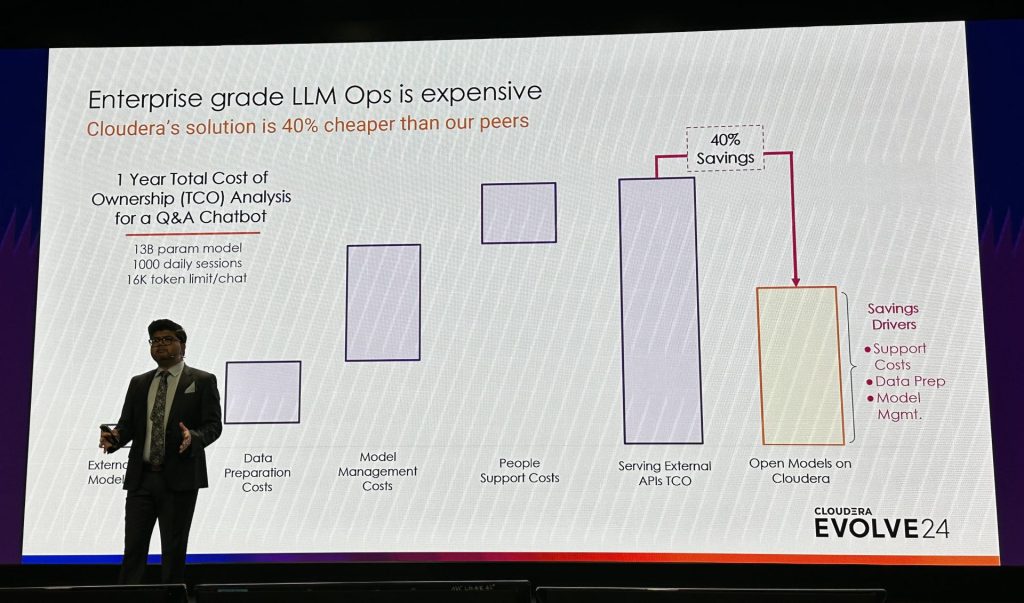

Developers can now use NVIDIA Tensor Core GPUs to build and customise enterprise-grade large language models (LLMs), with performance up to 36 times faster than previous standards and close to four times the throughput of traditional CPUs.

Cloudera AI Inference offers a seamless user experience by integrating its user interface (UI) and application programming interfaces (APIs) directly with NVIDIA NIM microservice containers. This integration eliminates the need for command-line interfaces (CLI) and separate monitoring systems, providing a unified platform for managing all models, whether LLMs or traditional models.

Key features of Cloudera AI Inference include advanced AI capabilities that allow users to optimise open-source LLMs such as LLaMA and Mistral, supporting advances in natural language processing (NLP), computer vision, and other AI fields.

Additionally, Cloudera AI Inference supports hybrid cloud deployment and data privacy, enabling workloads to run either on-premises or in the cloud. Virtual private cloud (VPC) deployments add further security and help meet regulatory compliance requirements.

The platform also emphasises scalability and monitoring, incorporating auto-scaling and high availability (HA). Together with real-time performance tracking, these capabilities support efficient resource management and prompt issue detection.

Moreover, it provides open, standards-compliant APIs that integrate with continuous integration and continuous deployment (CI/CD) pipelines and machine learning operations (MLOps) workflows.

Headquartered in San Jose, California, Cloudera offers a leading hybrid platform for data, analytics, and AI, and manages 100 times more data than typical cloud-only providers. This scale enables enterprises worldwide to turn data of all types, across public and private clouds, into insightful, trustworthy information.

Featured image: Charles Sansbury, CEO of Cloudera, addresses partners, clients and regional data industry professionals at EVOLVE24, held at the Museum of the Future in Dubai City on September 12, 2024. Credit: Arnold Pinto

Last updated 4 months ago by Arnold Pinto